Cutting the theatrics, keeping the security

"Security Theater" is all around us, every day. We go through its processes, without thinking about it.

It refers to all the measures that increase the feeling of security without actually making things more secure.

Here are some examples, taken from Schneier's essays. For each of them, ask yourself how it is actually going to improve your security.

- Photo ID checks in office buildings

- Shoes/belt/pants removal at airports (what The New York Times' Randall Stross calls "Mass rites of purification")

- Eyeballing boarding passes (not checking them electronically, as some airports do, but just looking at them... But guess what: you can create fake boarding passes online), and then shutting down the "fake boarding passes" website and raiding its developer's apartment, instead of actually closing the loophole.

- Throwing away a 5-year-old's Buzz Lightyear toy before boarding a plane (I don't even see how this increases the feeling of security...)

- Having all passengers squeeze into a tight space, in large groups, before going through security.

Although a lot of this has to do with airport security (mainly because it's the most visible to everybody), we have the same kind of useless processes in IT.

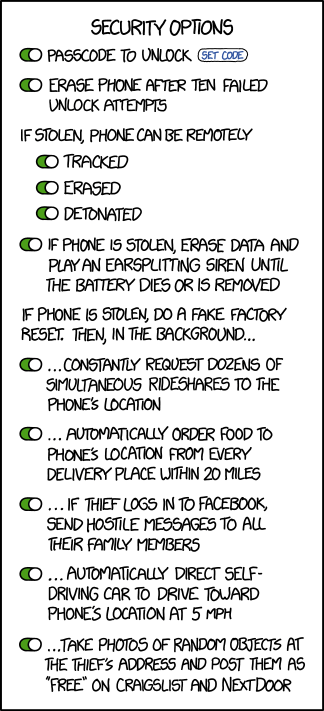

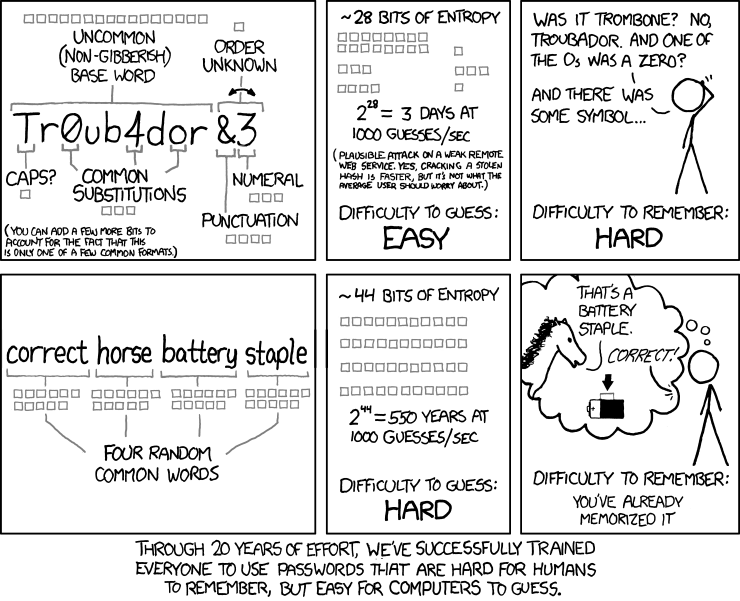

For instance, poorly designed password rules produce passwords that are easy for computers to crack but difficult for humans to remember:

Illustration by xkcd

(As a side note, it's not only Randall Munroe's comic saying this: even the person who came up with these rules now regrets them.)
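The comic's argument is really about entropy, and a quick calculation makes it concrete. The minimal Python sketch below compares a hypothetical rule-compliant pattern (one dictionary word, capitalized, plus a digit and a symbol) with a four-word passphrase. It assumes every choice is made uniformly at random, which is precisely what humans don't do, so the rule-based figure is optimistic. The 50,000-word dictionary and 32-symbol set are illustrative assumptions; 7,776 words is the size of Diceware-style word lists.

```python
import math


def entropy_bits(*choices: int) -> float:
    """Bits of entropy for a secret built from independent, uniformly random choices.

    Each argument is the number of options for one choice.
    """
    return sum(math.log2(n) for n in choices)


# Hypothetical "complexity rules" pattern: one word from an assumed 50,000-word
# dictionary (first letter capitalized, so no extra choice), then 1 digit and 1 symbol.
rule_based = entropy_bits(50_000, 10, 32)

# Passphrase: four words picked at random from a 7,776-word list.
passphrase = entropy_bits(7_776, 7_776, 7_776, 7_776)

print(f"rule-based pattern: {rule_based:.1f} bits")  # 23.9
print(f"4-word passphrase:  {passphrase:.1f} bits")  # 51.7
```

Each extra bit doubles the number of guesses, so the gap of roughly 28 bits means over 200 million times more work for an attacker, for a secret that is arguably easier to remember.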

There's also giving everyone a password, but leaving it at the default ('123456'), never asking users to change it, and hard-coding it in your publicly available application... Yes, it ticks the "everyone has a password" box. But in terms of actually increasing security, it falls short...

And one of my favorites is the 10-page tick-box security document: a document you have to tick in all the right places to deploy anything to Production. It was usually written 10 years ago by someone who has since left the company, its only update has been the company logo, and it is full of links to other documents (some of which still work).

When looking at your security procedures, keep one simple question in mind: does this actually make us more secure, or is it a way to shift the blame to another party?

Making other parties responsible might be a good move in certain cases, but, to quote Schneier again, "If there's one general precept of security that is universally true, it is that security works best when the entity that is in the best position to mitigate the risk is responsible for that risk". This means that shifting the responsibility to a third party makes sense only when that party is able to mitigate the risk.

Playing a legal game of "it's not me, it's you" is all well and good when the onus is on the party that pays for the consequences. But it does nothing to prevent breaches from happening. And it's even worse if the two fighting parties are part of the same company.

In the same vein, asking managers to validate code makes little sense. They are not in the best position to mitigate the risk, and they are more likely to rely on the quality of their developers' work than to do a full code review (and do you really want your company's management to spend their days reviewing thousands of code changes?). This is inefficient and detrimental to security, because it shifts the burden of writing secure code away from the developers. The same is true if your security team is the only party responsible for making sure the code is secure.

A good example of security theater is security through obscurity, i.e. the idea that hiding your architecture or the security measures in place will lead to a more secure system.

This is usually untrue, except in very specific cases (e.g. you don't want a honeypot to be known as one, since that would defeat its purpose; and, stating the obvious, secrets, the chain of command, and certain remediation procedures should be kept confidential). Yet it is still practiced, even though it has been criticized since '51. 1851, that is, when Alfred Charles Hobbs showed the public how to pick locks...

NIST's "Guide to General Server Security" lists "Open Design" as a principle: "System security should not depend on the secrecy of the implementation or its components." This is truer every day, because that kind of information rarely stays secret forever. Obscurity might make a system appear more secure, but, given enough time, an attacker will get that information. If your entire security relies on it, it won't be very effective.
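Modern cryptography follows exactly this open-design idea (often called Kerckhoffs's principle): the algorithm is public, and the only secret is the key. Here is a minimal Python sketch using the standard library's HMAC-SHA256; the message format is a made-up example, and `sign`/`verify` are illustrative helper names.

```python
import hashlib
import hmac
import secrets

# The algorithm (HMAC-SHA256) is public and standardized: anyone may read it.
# The only secret is this randomly generated key, which in a real system would
# come from a secrets manager or the environment, never from the source code.
key = secrets.token_bytes(32)


def sign(message: bytes, key: bytes) -> str:
    """Return a hex-encoded HMAC-SHA256 tag for the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, tag: str, key: bytes) -> bool:
    """Check a tag with a constant-time comparison to avoid timing leaks."""
    return hmac.compare_digest(sign(message, key), tag)


tag = sign(b"amount=100", key)
print(verify(b"amount=100", tag, key))    # True
print(verify(b"amount=9999", tag, key))   # False: tampered message
```

Publishing this code costs nothing; leaking `key` costs everything. That is where the protection effort belongs.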

This also applies to your code. The Twelve-Factor App notes, in its "Store config in the environment" section, that "A litmus test for whether an app has all config correctly factored out of the code is whether the codebase could be made open source at any moment, without compromising any credentials." How comfortable would you be with that?

At its core, this principle is about separating code from configuration (including secrets), but it also means that your encryption keys, web security tokens, etc. should not be in your code (and thus not in your repositories).
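In practice, passing that litmus test can start with reading every secret from the environment and refusing to start without it. A minimal Python sketch; `require_env`, `MissingSecretError`, and the `DB_PASSWORD` variable are hypothetical names chosen for illustration, and in production the environment would typically be populated by a secrets manager rather than by hand.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent from the environment."""


def require_env(name: str) -> str:
    """Read a required setting from the environment, failing fast if it's missing.

    There is deliberately no default value: a fallback such as '123456'
    would silently ship a known password to every environment.
    """
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"required environment variable {name!r} is not set")
    return value


if __name__ == "__main__":
    # DB_PASSWORD is a hypothetical variable name used for illustration.
    try:
        db_password = require_env("DB_PASSWORD")
    except MissingSecretError as err:
        print(f"refusing to start: {err}")
```

Failing loudly at startup is the design choice here: a missing secret becomes a deployment error instead of a silent, insecure default.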

What's more, sharing security best practices is an excellent way for the "good guys" to improve. Real-time sharing of threats, for instance, when used for security intelligence, allows you to find patterns and prevent attacks.

Illustration by chumworth

Imagine you need to keep something physical hidden. You put it in a safe, then put the key to that safe in another safe, the key to that second safe in a cryptex, and the cryptex's combination encoded using a Jefferson cipher. Now that's super safe!

If your intention was to write a novel or a new movie, congrats!

But if you were intending to keep something safe, it might not be the best way. You are adding unnecessary complexity, and, by doing so, making the whole process less secure.

Making your code less readable, or deliberately obfuscating the binaries, is not a good way to hide secrets, unless what you are trying to hide is the algorithm itself. If it's a key embedded in the code, well... remember DRM on DVDs and CDs? How successful was that?

Code obfuscation might work if the audience is limited and there is little interest in breaking your code. Otherwise, it will be cracked, reverse-engineered, and used against you.
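To see why obfuscation doesn't protect a key embedded in code, consider the simplest scheme, XOR masking, in the minimal Python sketch below (the key, the mask, and the helper names are hypothetical). Whatever routine de-obfuscates the key at runtime has to ship with the application, so an attacker can simply reuse it; and even without it, a single-byte mask falls to 256 guesses. More elaborate schemes raise the effort, not the principle.

```python
MASK = 0x5A  # the "secret" mask has to ship inside the application too


def obfuscate(plain: str) -> bytes:
    """XOR every byte with MASK: looks scrambled, protects nothing."""
    return bytes(b ^ MASK for b in plain.encode("ascii"))


def deobfuscate(blob: bytes, mask: int = MASK) -> str:
    """The routine the application itself must run to use the key."""
    return bytes(b ^ mask for b in blob).decode("ascii")


# What ends up embedded in the shipped binary (hypothetical key).
EMBEDDED = obfuscate("hypothetical-api-key")


def brute_force(blob: bytes) -> list[str]:
    """An attacker who doesn't even find MASK can try all 256 possibilities."""
    candidates = []
    for mask in range(256):
        raw = bytes(b ^ mask for b in blob)
        if raw.isascii() and raw.decode("ascii").isprintable():
            candidates.append(raw.decode("ascii"))
    return candidates


print(deobfuscate(EMBEDDED))                             # hypothetical-api-key
print("hypothetical-api-key" in brute_force(EMBEDDED))   # True
```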

You need to go beyond that.

Similarly, making things difficult for a potential hacker is not effective, except as a way to slow them down, coupled with appropriate tracking. But even then, it's bad practice.

As a simple rule, complexity is the enemy of security.

Part of the problem with security is that the different actors (e.g. the Network team, Ops team, Development team, Release team, Security team, and, last but not least, users) don't work or talk with each other, either because they don't see the point, or because they don't speak the same language.

Regardless, all these actors have an impact on security, and each of them has its own considerations and priorities.

Security is always a trade-off. Implementing it will have an impact on something else: features, cost, delivery speed, etc. Each of these actors will have to make that choice, and if security is not considered a critical feature, the safety of the entire system might be in jeopardy.

One of the arguments in favor of this kind of security measure is: "Fine, we might not be super-secure, but at least we have something!"

The problem with this statement is that, no, it might not be better than nothing. Badly constructed (and complex) security has two major downsides:

1) No one will challenge it, because it looks secure (the same way Star Trek technobabble sounds legit), and, let's face it, no one likes to touch it and be blamed if something goes wrong.

2) It directs resources to useless work instead of focusing them on improving the processes and actually increasing security.

Every time we ask a developer to sift through dozens of pages of security requirements before delivering code, we are asking them either to spend their time on useless work or to shoulder the risk if something goes wrong. So, the solution seems obvious: get a tool to do it!

Frankly speaking, tools have to be part of the equation when it comes to security. Humans make mistakes, knowingly or not, and they cannot devote most of their time to sifting through thousands of pages of new vulnerabilities every week to see if their code is still safe. For all of that, you need tools to do the heavy lifting.

Code review done by developers does not find all the issues in the code. A study showed that, in certain cases, as few as 20% of developers were able to find an SQL injection or XSS vulnerability during code review. The same study showed that finding 80% of the vulnerabilities required 10 developers to review the code. To get close to 100%, you need to double that.
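For a reviewer who hasn't been trained to spot it, SQL injection can look perfectly innocent. A minimal Python sketch using the standard library's `sqlite3` and a hypothetical `users` table: the two functions differ by a single line, and only one of them is exploitable.

```python
import sqlite3

# Hypothetical in-memory table for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 0), ("bob", 1)])


def find_user_unsafe(name: str) -> list:
    # VULNERABLE: user input is pasted into the SQL text itself.
    query = f"SELECT name FROM users WHERE name = '{name}' ORDER BY name"
    return conn.execute(query).fetchall()


def find_user_safe(name: str) -> list:
    # Parameterized: the driver sends `name` as data, never as SQL.
    query = "SELECT name FROM users WHERE name = ? ORDER BY name"
    return conn.execute(query, (name,)).fetchall()


payload = "x' OR '1'='1"
print(find_user_unsafe(payload))  # [('alice',), ('bob',)]: every row leaks
print(find_user_safe(payload))    # []: no user has that literal name
```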

According to this study, checklists also have little to no impact on code review. They might even have a negative impact, as reviewers seem to find fewer issues in code when using checklists.

But tools alone won't be able to find all the issues (see this). They can usually find the simple ones, but they tend to generate a lot of noise (false positives) and miss certain flaws (false negatives).

Although tools are getting better, they need to work in conjunction with developer training and better testing. Part of the shift-left principle is training developers to understand the implications of bad decisions on their code.

We should also keep in mind that no developer writes insecure code on purpose (hopefully). They usually do so because the pressure to deliver features is high, because they have not been trained in secure development, because they rely too much on a framework or tools, or because security was never considered when the project's cost was approved. Usually, it's a mix of all of that.

Developers need to be trained to write secure code, the same way they need to be trained to build microservices, write scalable software, or develop in Java. There is no reason why one should be more important than the other.

Getting developers to adhere to the Rugged Manifesto, for instance, is a good way to start. But if developers are not given time to train, and later in the project to bake security into their architecture and code, it will be in vain.

What about GenAI?

Is it possible to write an article in 2023 without talking about GenAI?

You should put GenAI (and predictive AI) on your side, because hackers clearly do, and you need to balance the power.

There has been an increase in the number of phishing attacks in the past year: security companies have reported a 1265% increase and linked it mainly to GenAI.

Attacks are also becoming more complex and longer-lasting. They are designed to strike in places where we did not expect them before, because GenAI has drastically lowered the cost of running a phishing campaign.

Before GenAI, spear-phishing (targeting a specific person with a message tailored to that person's habits) was very costly, and as such reserved for specific roles (executives, accounting, etc.). That's no longer the case, and we will see a large increase in personally crafted spear-phishing e-mails in the future. And it's not only e-mails.

Current technology can also generate pictures and videos. This means you could have a very real, convincing video chat with your boss while actually talking to a GenAI application.

GenAI tools for developers (like GitHub Copilot) have filters to detect nefarious use. However, open-source models can run on a large laptop or desktop (think gaming computer) without any such limitations. This can, for instance, help someone writing viruses craft custom versions (thousands of them, if they want) that behave slightly differently and have different signatures to bypass antivirus software.

Not scared enough? There are other attack styles in this article from the World Economic Forum. And hackers are usually nothing if not creative, so expect the unexpected.

So how can you get AI on your side? A lot of new tools are coming out, and you can expect more very soon. You can, for instance, use New Relic's Grok to get better instrumentation and detect issues faster.

You can use GenAI to create synthetic, production-like data instead of relying on masked or tokenized production data in your test environments (just be sure to review the tool you're using in terms of data security, of course, or run it on your own platform), and to create smarter test scenarios. For example, generative adversarial networks (GANs) can create synthetic data that preserves the statistical properties and patterns of real (production) data but does not contain any sensitive or identifiable information.

Tools like GitHub Copilot can help you replace libraries that have a documented CVE and change the affected code faster. Accelerating development can also help you react faster to zero-day attacks.

You can use GenAI to create tests for developers to train their security and secure-coding skills. This will not replace proper training, but it can be helpful for reminders or interview questions. The benefit is that the questions or tests will always be different and aligned with what can happen in real life.

Also, it might not be one of your first thoughts, but don't underestimate the benefit of using GenAI to write documentation, or to read and summarize it. Changes in policies or regulations, or long security policy documents, can be hard for developers to read. They are usually not written in a developer-friendly way and lack clarity, sometimes even for security specialists. Getting GenAI to summarize documents clearly, or to answer a "Does this apply to me?" question, could be very useful. Furthermore, using it to write clear, concise reports about security risks or breaches will help share them with other security teams in a safe, quick, and simple way.

It's of course not only for developers: GenAI can be used to "translate" technical documents into business terms, or security and compliance documents into something more palatable to a Product Owner.
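A GAN is beyond the scope of a snippet, but the underlying idea, keeping the statistics while dropping the individuals, can be shown with a much simpler technique swapped in for illustration: fit a normal distribution to a numeric column and sample from it. This minimal Python sketch assumes a single, roughly normal column (the order amounts are made up, and `synthesize_column` is a hypothetical helper); unlike a GAN, it preserves neither correlations between columns nor non-normal shapes.

```python
import statistics
from statistics import NormalDist
from typing import List, Optional


def synthesize_column(real_values: List[float], n: int, seed: Optional[int] = None) -> List[float]:
    """Sample n synthetic values matching the mean and standard deviation of real_values."""
    dist = NormalDist(statistics.fmean(real_values), statistics.stdev(real_values))
    return dist.samples(n, seed=seed)


# Hypothetical "production" order amounts; no identifiers are carried over.
real_amounts = [120.0, 80.5, 99.9, 150.0, 110.25, 95.0]
synthetic = synthesize_column(real_amounts, 10_000, seed=42)

print(f"real:      mean={statistics.fmean(real_amounts):.1f} stdev={statistics.stdev(real_amounts):.1f}")
print(f"synthetic: mean={statistics.fmean(synthetic):.1f} stdev={statistics.stdev(synthetic):.1f}")
```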

But let's get back to actions you can take without GenAI.

First things first: Where are you?

Gather all the data on your current situation. Start by asking these questions:

- What is my attack surface? Look at it not from a network point of view, but from an application one (and think about all the SaaS you're using, too).

- Do I have a CSIRP (Cyber Security Incident Response Plan) in place?

- Who are the actors of my security? (Software, hardware, and, most importantly, people.)

- What is the security awareness of my developers? My users?

- Where are my secrets?

- Are my code and config separate? How is the config managed?

- How can I detect an intrusion? A data leak? Horizontal privilege escalation and lateral movement? If any of this happens, how will I react? Can I isolate the system? The user?

- Which software suites am I currently using?

- How do I detect outdated software?

- Am I able to detect phishing? Spear-phishing?
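For the "Where are my secrets?" question, a first pass can be as simple as searching your repositories for strings that look like credentials. A minimal Python sketch; the two patterns are illustrative assumptions (the `AKIA` prefix followed by 16 uppercase letters or digits is the well-known shape of AWS access key IDs), and dedicated secret scanners typically cover many more formats and also search version-control history.

```python
import re

# Illustrative patterns only; real scanners ship hundreds of them.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_credential": re.compile(
        r"\b(password|passwd|secret|api_key|token)\s*[:=]\s*['\"][^'\"]+['\"]",
        re.IGNORECASE,
    ),
}


def scan(text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern name) for every line that looks like a secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


sample = '''db_host = "db.internal"
password = "123456"
key = "AKIAABCDEFGHIJKLMNOP"
'''
print(scan(sample))  # [(2, 'hardcoded_credential'), (3, 'aws_access_key_id')]
```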

Once you have a good view of your situation, move on to the improvement part of the plan.

Go through all the identified security procedures. Objectively review each of them, asking at least these questions:

- Were they created, or last actually updated, a long time ago? (If it's more than a year, they're likely not relevant anymore.)

- How were they created? Are they relying on facts?

- Is their purpose shifting potential blame to someone else? Is it only for legal reasons?

- Can everyone understand them?

- Do they increase security?

Based on your answers, decide whether each procedure needs a proper review or should be discarded. If you decide to discard one, what is the impact?

And if you review them, take note of the applications that have been relying on outdated procedures, as you will need to talk to the application teams...

Prepare a training plan for developers. Some companies, like Secure Code Warrior, can help you with this.

Plan the training so that it's not a chore, but a fun, enriching experience. Being able to write secure code is a huge advantage on the job market, and it will only grow. Some ideas?

- Making a brand

- Making fun events and competitions

- Giving cash prizes (the cost of a breach is way higher than the cost of cash prizes)

- Level-up program, badges

It's also very important to keep everyone up to date. Sharing security news, for instance, or creating groups of people with a strong interest in security, is a good way to keep up with the news.

Security is a trade-off. It has a cost.

For your applications, network, and company to be secure (and safe), or even simply compliant, there will be a need for funds.

As such, the impact of adding security needs to be understood by the stakeholders, along with the impact of not doing it.

It's important to consider security as a feature, not only as a mandatory (expensive) layer.

Let's repeat it once again: security is a system.

It's important that all actors understand each other's point of view, and that actions are coordinated. Holding a regular workshop with all parties, or representatives of each party, is a good way to ensure that everyone is moving in the right direction.

To be effective, though, either that workshop or a separate status meeting needs to be chaired by senior management.

It's important for everyone to understand that it's not simply a wish from the security team, but an actual push from the top of the company.

Tools are a necessary part of your security defense. But to function properly, they need to be configured and clearly coordinated, to prevent issues from slipping through the net, tools from overlapping (and doubling the noise), or gaps between them.

It's important to look at your tool map holistically: from before the development phase (proper planning, project funding, secure architecture), through development (secure repositories, separation of configuration and code, secrets management), all the way to the monitoring phase, and everything in between (open source vulnerabilities, SCA, DCA, etc.).

The tools you select need to give appropriate feedback to the audience they target. For instance, if you are looking at an OSS vulnerability scan, integrate it into the developers' code editor and make sure it gives messages developers can act on. Similarly, a network engineer looking at a vulnerability report needs to see clear protocols, ports, and actions to perform (this is another area where GenAI can help).

And of course, keep them updated. Zero-day attacks do exist, but the likelihood of your system being attacked by a bot increases if you have a lot of unpatched security flaws.
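Overlap is one of the easier coordination problems to tackle: normalize each tool's output to a common shape and merge findings that point at the same weakness in the same place. A minimal Python sketch; the finding format, tool names, and file paths are hypothetical, and real tools would each need an adapter for their report format (SARIF is a common standard for this).

```python
from collections import defaultdict


def deduplicate(findings: list[dict]) -> list[dict]:
    """Merge findings reported by several tools for the same file, line, and CWE."""
    merged = defaultdict(set)
    for finding in findings:
        key = (finding["file"], finding["line"], finding["cwe"])
        merged[key].add(finding["tool"])
    return [
        {"file": file, "line": line, "cwe": cwe, "tools": sorted(tools)}
        for (file, line, cwe), tools in sorted(merged.items())
    ]


# Hypothetical reports from two scanners that overlap.
raw = [
    {"tool": "scanner-a", "file": "app/db.py", "line": 42, "cwe": "CWE-89"},
    {"tool": "scanner-b", "file": "app/db.py", "line": 42, "cwe": "CWE-89"},
    {"tool": "scanner-b", "file": "app/config.py", "line": 7, "cwe": "CWE-798"},
]

for item in deduplicate(raw):
    print(item)
```

Keeping the list of tools that reported each issue, rather than discarding duplicates, also tells you which tools are redundant and which ones are the only line of defense for a given weakness.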
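Knowing whether something is unpatched starts with comparing what you run against the first fixed version listed in an advisory. A minimal Python sketch with made-up package names, versions, and helper names; it only handles purely numeric dotted versions, so for Python packages you would rather use the `packaging` library's `Version` class. Note that versions are compared as integer tuples, not strings: as strings, "1.4.10" would sort before "1.4.2".

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn '1.4.10' into (1, 4, 10) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def find_outdated(installed: dict[str, str], first_fixed: dict[str, str]) -> list[str]:
    """Return packages whose installed version is older than the first fixed version."""
    return sorted(
        name
        for name, version in installed.items()
        if name in first_fixed and parse_version(version) < parse_version(first_fixed[name])
    )


# Hypothetical inventory and advisory data.
installed = {"libfoo": "1.4.2", "libbar": "2.0.0", "libbaz": "0.9"}
first_fixed = {"libfoo": "1.4.10", "libbaz": "0.9.1"}

print(find_outdated(installed, first_fixed))  # ['libbaz', 'libfoo']
```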

CSIRP or Cyber Security Incident Response Plan. Five letters, five areas:

- Prevention

- Detection

- Containment

- Remediation

- Post-incident response

An IBM survey showed that, in 2017, 24% of enterprises had a CSIRP applied consistently across the enterprise, while 50% had none, or no formal one.

And the effort is mainly focused on prevention and detection, with only 4% of it going to post-incident response.

More effort is put into prevention than into containment, remediation, and post-incident response combined.

Prevention is very important. However, detection and the ability to respond quickly (automatically, if possible) to a breach are even more important, as 27% of companies will experience a breach each year.

Focusing on processes to apply containment and remediation quickly, and to respond appropriately, should be one of your priorities.
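To put that 27% figure in perspective: if we assume, simplistically, that the yearly risk stays constant and each year is independent, the probability of at least one breach over n years is 1 - (1 - 0.27)^n. A quick Python calculation under those assumptions (`breach_probability` is just an illustrative helper):

```python
# Figure quoted above; assumed constant and independent from year to year.
ANNUAL_BREACH_RATE = 0.27


def breach_probability(years: int, annual_rate: float = ANNUAL_BREACH_RATE) -> float:
    """Probability of at least one breach within the given number of years."""
    return 1 - (1 - annual_rate) ** years


for years in (1, 3, 5):
    print(f"{years} year(s): {breach_probability(years):.1%}")
# 1 year(s): 27.0%
# 3 year(s): 61.1%
# 5 year(s): 79.3%
```

Under these assumptions, a breach is more likely than not within three years, which is why containment and remediation deserve more than a sliver of the effort.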

In case of a breach, your first reaction should not be panic.

Get help.

We all love the computer-genius characters in movies and series: the ones who type super fast and proudly announce "I'm in!" or "I've blocked them for now!". Unfortunately, reality is different (and comes with less nerve-wracking music :) ).

More likely, you will need a team, and some help from external people, to build a strong security strategy and implement it. And another set of brains and hands to keep it up to date.

Your security team needs to keep learning, attend conferences regularly, and constantly share what they discover with others. They need to participate in communities, meetups, and other places where security teams meet. I would also suggest attending developer conferences, because many of them now include at least a few talks focused on security.

I'm personally very fond of the infosec.exchange community, where a lot of great people share what's happening in the cyber security domain.

Security is a journey that never ends

There is no end to security. Reviewing your procedures should be done on a regular basis. Training developers, raising awareness, and staying up to date are all eternal aspects of staying secure.

You will never get to a stage where you can say "We're done with security, we're perfect!". Someone will inevitably prove you wrong.

It's exciting work, and it's here to stay.