In multi-tenant applications, we often need to give different tenants access to different folders inside a shared S3 bucket. An obvious approach is to create a separate IAM role for every tenant, each with permission to access only that tenant's folder. The drawback is that you have to create and manage a lot of roles. S3 Access Points offer a way around this.

With S3 Access Points, you can restrict access to individual folders inside a bucket without creating multiple IAM users or roles. Let's see how to set this up so that an EC2 instance can reach different folders of the bucket depending on which access point it uses.

Note: We have used the us-east-1 AWS region. If you are using a different region, make sure to update the region in the resource ARNs accordingly. Also replace <AWS_ACCOUNT_ID> with your own AWS account ID.

Step 1: Create an IAM role named tenant for the EC2 instance with the following policy.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::athena1989/folder1/*",
        "arn:aws:s3:::athena1989/folder1",
        "arn:aws:s3:::athena1989/folder2/*",
        "arn:aws:s3:::athena1989/folder2"
      ]
    }
  ]
}
```

Here athena1989 is the S3 bucket name. This policy allows this IAM role to read/write objects under folder1 and folder2 of the S3 bucket athena1989.
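To see which object keys these Resource entries actually cover, here is a minimal sketch. It uses Python's `fnmatch.fnmatchcase` as a stand-in for IAM wildcard matching. Both treat `*` as any run of characters, including `/`. `fnmatchcase` also understands `[...]` character classes, which IAM does not, but these ARNs contain none. The helper names `object_arn` and `covered` are hypothetical and exist only for illustration.

```python
from fnmatch import fnmatchcase

# Resource patterns copied from the Step 1 identity policy on the tenant role.
TENANT_ROLE_RESOURCES = [
    "arn:aws:s3:::athena1989/folder1/*",
    "arn:aws:s3:::athena1989/folder1",
    "arn:aws:s3:::athena1989/folder2/*",
    "arn:aws:s3:::athena1989/folder2",
]


def object_arn(bucket: str, key: str) -> str:
    """ARN of an object addressed directly through its bucket."""
    return f"arn:aws:s3:::{bucket}/{key}"


def covered(resource_arn: str, patterns=TENANT_ROLE_RESOURCES) -> bool:
    """Simplified IAM-style match: '*' spans any characters, including '/'."""
    return any(fnmatchcase(resource_arn, p) for p in patterns)


if __name__ == "__main__":
    for key in ("folder1/package.txt", "folder2/a/b.txt",
                "folder10/package.txt", "other/package.txt"):
        print(key, covered(object_arn("athena1989", key)))
```

The `/*` patterns are what grant access to objects inside each folder. The trailing slash keeps a key such as `folder10/package.txt` out. The entries without `/*` only match an object whose key is exactly `folder1` or `folder2`.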

Step 2: Now add the following bucket policy to the athena1989 bucket, where tenant is the role we created in Step 1. This policy denies the tenant role any access to the bucket unless the request is made through an S3 access point in your account in us-east-1.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Deny",
      "Principal": {
        "AWS": "arn:aws:iam::<AWS_ACCOUNT_ID>:role/tenant"
      },
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::athena1989",
        "arn:aws:s3:::athena1989/*"
      ],
      "Condition": {
        "StringNotLike": {
          "s3:DataAccessPointArn": "arn:aws:s3:us-east-1:<AWS_ACCOUNT_ID>:accesspoint/*"
        }
      }
    }
  ]
}
```

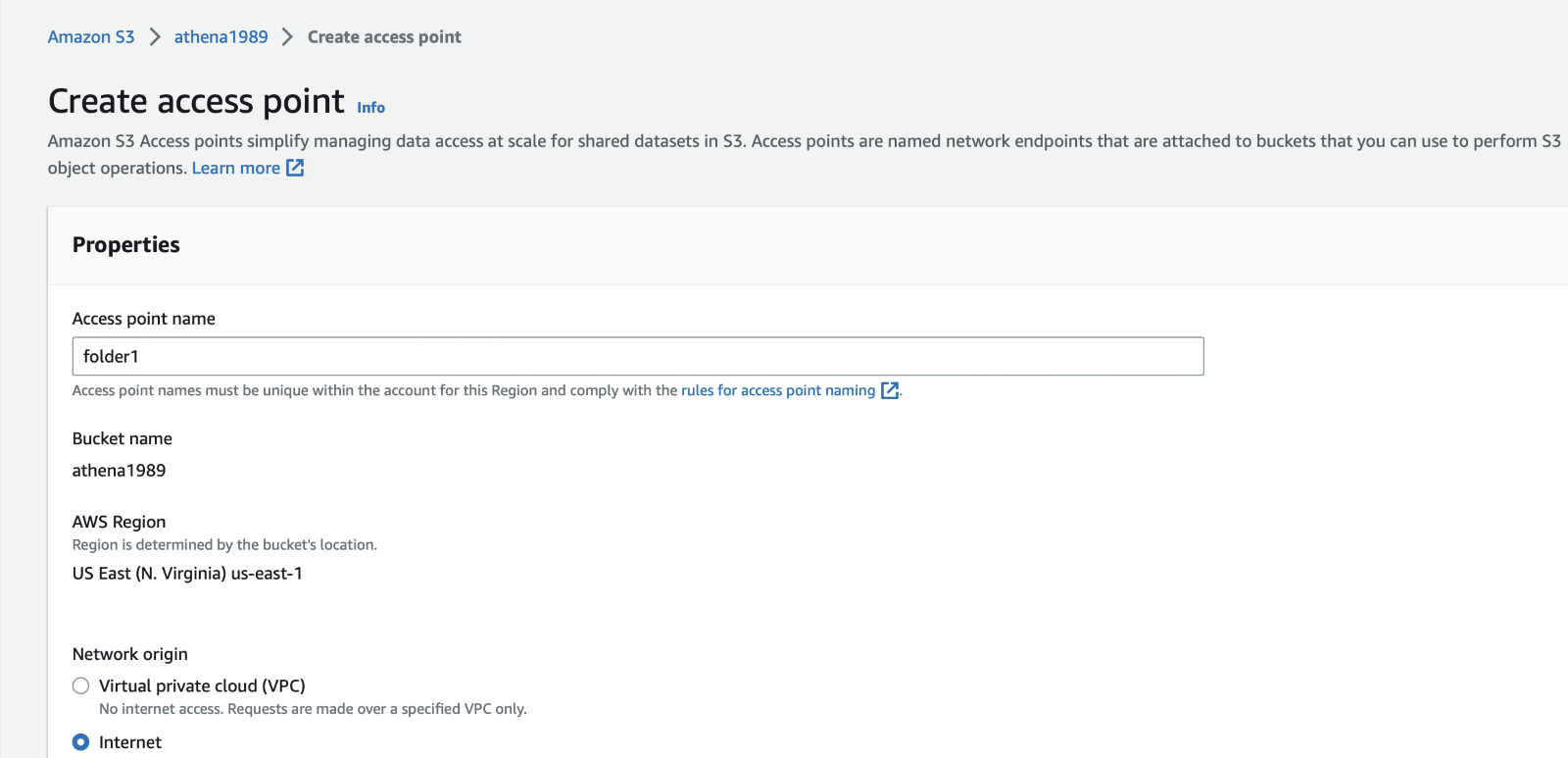
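The key to this bucket policy is how `StringNotLike` treats the `s3:DataAccessPointArn` condition key. That key is only present when a request arrives through an access point. For negated operators such as `StringNotLike`, IAM treats a missing key as a true condition, so a direct request from the tenant role triggers the Deny. The following is a minimal sketch of that logic, not AWS's actual evaluation engine. It assumes a placeholder account ID `111122223333` and uses hypothetical helper names. It also simplifies the principal check to an exact role-ARN comparison. In reality, the EC2 instance calls S3 with an assumed-role session of `tenant`.

```python
from fnmatch import fnmatchcase
from typing import Optional

ACCOUNT_ID = "111122223333"  # placeholder for <AWS_ACCOUNT_ID>
TENANT_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/tenant"
ACCESS_POINT_PATTERN = f"arn:aws:s3:us-east-1:{ACCOUNT_ID}:accesspoint/*"


def string_not_like(value: Optional[str], pattern: str) -> bool:
    """StringNotLike: true when the key is missing or does not match the pattern."""
    return value is None or not fnmatchcase(value, pattern)


def bucket_policy_denies(principal_arn: str, data_access_point_arn: Optional[str]) -> bool:
    """Return True if the Step 2 Deny statement applies to a request.

    data_access_point_arn stands in for s3:DataAccessPointArn; it is None
    for a request sent straight to the bucket.
    """
    if principal_arn != TENANT_ROLE_ARN:
        return False  # the statement only names the tenant role (simplified match)
    return string_not_like(data_access_point_arn, ACCESS_POINT_PATTERN)


if __name__ == "__main__":
    ap = f"arn:aws:s3:us-east-1:{ACCOUNT_ID}:accesspoint/folder1"
    print("direct:", bucket_policy_denies(TENANT_ROLE_ARN, None))
    print("via folder1 access point:", bucket_policy_denies(TENANT_ROLE_ARN, ap))
```

Because the pattern names the region, a request through an access point in a different region would also be denied by this statement.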

Step 3: Now create an S3 access point named folder1 on the athena1989 bucket, with its network origin set to Internet and public access blocked.

If you want to restrict access further, you can choose VPC as the network origin instead, so that the access point only accepts requests from a specific VPC. Add the following access point policy to this access point.

```json
{
  "Version": "2008-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::<AWS_ACCOUNT_ID>:role/tenant"
      },
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:us-east-1:<AWS_ACCOUNT_ID>:accesspoint/folder1/object/folder1/*"
    }
  ]
}
```

This policy allows the tenant role to read/write objects in the folder1 folder of the S3 bucket.
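Note that the Resource here is not a bucket ARN. An object requested through an access point is identified as `<access point ARN>/object/<key>`, so the policy pins both the access point (folder1) and the key prefix (folder1/). The following minimal sketch builds that resource ARN for a request and checks it against the Step 3 pattern. As before, `fnmatchcase` approximates IAM wildcard matching. The account ID `111122223333` is a placeholder, and the function names are hypothetical.

```python
from fnmatch import fnmatchcase

ACCOUNT_ID = "111122223333"  # placeholder for <AWS_ACCOUNT_ID>
FOLDER1_AP_ARN = f"arn:aws:s3:us-east-1:{ACCOUNT_ID}:accesspoint/folder1"
# Resource from the Step 3 access point policy.
FOLDER1_AP_RESOURCE = f"{FOLDER1_AP_ARN}/object/folder1/*"


def object_via_access_point(access_point_arn: str, key: str) -> str:
    """Resource ARN of object `key` when it is requested through an access point."""
    return f"{access_point_arn}/object/{key}"


def access_point_allows(access_point_arn: str, key: str, resource_pattern: str) -> bool:
    """Simplified check of an access point policy's Resource against one request."""
    return fnmatchcase(object_via_access_point(access_point_arn, key), resource_pattern)


if __name__ == "__main__":
    for key in ("folder1/package.txt", "folder2/package.txt"):
        print(key, access_point_allows(FOLDER1_AP_ARN, key, FOLDER1_AP_RESOURCE))
```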

Step 4: Repeat Step 3 to create another S3 access point named folder2, again with network origin Internet and public access blocked, and add the following access point policy.

```json
{
  "Version": "2008-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::<AWS_ACCOUNT_ID>:role/tenant"
      },
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:us-east-1:<AWS_ACCOUNT_ID>:accesspoint/folder2/object/folder2/*"
    }
  ]
}
```

This policy allows the tenant role to read/write objects in the folder2 folder of the S3 bucket.
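The access point policies in Steps 3 and 4 differ only in the access point name and the folder prefix. With many tenants, it may therefore be easier to generate them than to write each one by hand. The following is a minimal sketch under these assumptions: each access point is named after its folder, as in this article; `111122223333` is a placeholder account ID; and `access_point_policy` and `policies_for_tenants` are hypothetical helpers. Each generated document could then be attached to its access point, for example with `aws s3control put-access-point-policy`.

```python
import json

REGION = "us-east-1"          # region used throughout this article
ACCOUNT_ID = "111122223333"   # placeholder; substitute your own account ID
ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/tenant"


def access_point_policy(access_point: str, prefix: str) -> dict:
    """Policy in the shape of Steps 3 and 4: one access point, one key prefix."""
    ap_arn = f"arn:aws:s3:{REGION}:{ACCOUNT_ID}:accesspoint/{access_point}"
    return {
        "Version": "2008-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": ROLE_ARN},
                "Action": ["s3:GetObject", "s3:PutObject"],
                # Objects reached through an access point: <ap-arn>/object/<key>
                "Resource": f"{ap_arn}/object/{prefix}/*",
            }
        ],
    }


def policies_for_tenants(folders):
    """Map each folder name to a JSON policy for an access point of the same name."""
    return {folder: json.dumps(access_point_policy(folder, folder), indent=2)
            for folder in folders}


if __name__ == "__main__":
    for name, doc in policies_for_tenants(["folder1", "folder2", "folder3"]).items():
        print(f"--- access point {name} ---")
        print(doc)
```

All of the generated policies name the same tenant role. This matches the article's point that adding a folder means adding an access point, not a new IAM role.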

Step 5: We are done with the setup, and it is time for some testing. Launch an EC2 instance with the tenant role attached, run the following commands, and check the results:

```shell
echo "Test" > package.txt
aws s3 cp package.txt s3://athena1989/folder1/
aws s3 cp package.txt s3://athena1989/folder2/
```

Both copy commands fail with Access Denied, because the bucket policy denies the tenant role direct access to the bucket.

```shell
aws s3api put-object --body package.txt --bucket arn:aws:s3:us-east-1:<AWS_ACCOUNT_ID>:accesspoint/folder1 --key folder1/package.txt
```

This command succeeds because the tenant role is allowed to use the folder1 access point, and that access point's policy permits reads and writes under the folder1 folder.

```shell
aws s3api put-object --body package.txt --bucket arn:aws:s3:us-east-1:<AWS_ACCOUNT_ID>:accesspoint/folder1 --key folder2/package.txt
```

This command fails with Access Denied. Although the tenant role is allowed to use the folder1 access point, that access point's policy does not permit access to the folder2 folder.

```shell
aws s3api put-object --body package.txt --bucket arn:aws:s3:us-east-1:<AWS_ACCOUNT_ID>:accesspoint/folder2 --key folder2/package.txt
```

This command succeeds because the tenant role is allowed to use the folder2 access point, and that access point's policy permits reads and writes under the folder2 folder.

```shell
aws s3api put-object --body package.txt --bucket arn:aws:s3:us-east-1:<AWS_ACCOUNT_ID>:accesspoint/folder2 --key folder1/package.txt
```

This command fails with Access Denied. Although the tenant role is allowed to use the folder2 access point, that access point's policy does not permit access to the folder1 folder.
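The outcomes of these tests follow from three layers working together: the tenant role's identity policy (Step 1), the bucket policy's explicit Deny (Step 2), and the per-access-point policies (Steps 3 and 4). The following is a simplified model of how those layers combine for a PutObject by the tenant role. It is not how AWS evaluates policies internally. It assumes a placeholder account ID, `111122223333`, and checks the identity policy against the bucket object ARN, since that is how the Step 1 policy is written. The names `is_allowed` and `ap_arn` are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Optional

ACCOUNT = "111122223333"  # placeholder for <AWS_ACCOUNT_ID>
REGION = "us-east-1"
BUCKET = "athena1989"

# Step 1: identity policy resources on the tenant role.
IDENTITY_RESOURCES = [
    f"arn:aws:s3:::{BUCKET}/folder1/*", f"arn:aws:s3:::{BUCKET}/folder1",
    f"arn:aws:s3:::{BUCKET}/folder2/*", f"arn:aws:s3:::{BUCKET}/folder2",
]
# Step 2: requests not made through a matching access point are denied.
AP_PATTERN = f"arn:aws:s3:{REGION}:{ACCOUNT}:accesspoint/*"
# Steps 3 and 4: access point name -> Resource allowed by its policy.
AP_POLICIES = {
    name: f"arn:aws:s3:{REGION}:{ACCOUNT}:accesspoint/{name}/object/{name}/*"
    for name in ("folder1", "folder2")
}


def ap_arn(name: str) -> str:
    return f"arn:aws:s3:{REGION}:{ACCOUNT}:accesspoint/{name}"


def is_allowed(key: str, access_point: Optional[str] = None) -> bool:
    """Simplified outcome of a PutObject by the tenant role (None = direct to bucket)."""
    # 1. The identity policy must allow the object.
    if not any(fnmatchcase(f"arn:aws:s3:::{BUCKET}/{key}", p) for p in IDENTITY_RESOURCES):
        return False
    # 2. Explicit Deny in the bucket policy: no access point, or a non-matching one.
    if access_point is None or not fnmatchcase(ap_arn(access_point), AP_PATTERN):
        return False
    # 3. The access point's own policy must allow the object requested through it.
    pattern = AP_POLICIES.get(access_point)
    return pattern is not None and fnmatchcase(f"{ap_arn(access_point)}/object/{key}", pattern)


if __name__ == "__main__":
    cases = [
        ("folder1/package.txt", None),
        ("folder2/package.txt", None),
        ("folder1/package.txt", "folder1"),
        ("folder2/package.txt", "folder1"),
        ("folder2/package.txt", "folder2"),
        ("folder1/package.txt", "folder2"),
    ]
    for key, ap in cases:
        print(f"{ap or 'direct':8} {key:22} {'allowed' if is_allowed(key, ap) else 'AccessDenied'}")
```

Running it prints one line per Step 5 command, in the same order, and each outcome matches the result described for that command.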

Conclusion

S3 Access Points provide a simpler and more granular way to manage access to S3 buckets. Each access point carries its own policy scoped to a specific folder, so you can grant access to only the necessary resources without maintaining a separate IAM role per folder. This reduces the risk of misconfiguration and access mistakes.

For more information, refer to the Amazon S3 Access Points documentation.